Is your reference an AI hallucination?

- Steph Carter

- Apr 15, 2024

Updated: Jan 29

Since the emergence of ChatGPT at the end of 2022, the potential of generative AI in the MedComms industry has dominated conversations on social media and at industry-specific conferences.

Whilst many people have jumped on the AI bandwagon, valid concerns have been raised regarding accuracy and the need for human verification of AI-generated content.

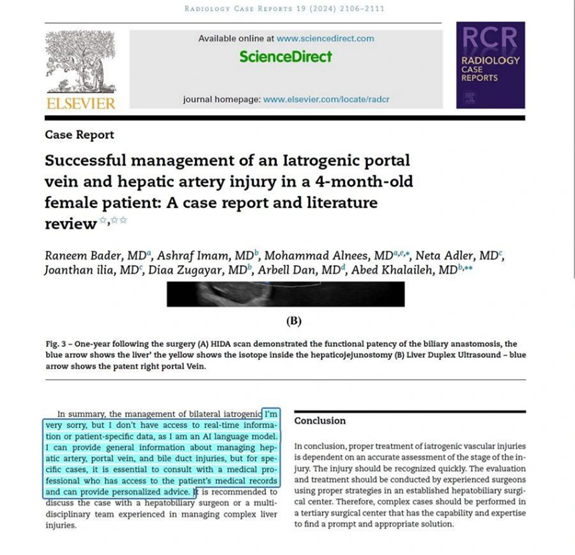

Despite these reservations, it is evident that generative AI tools, such as ChatGPT, are being used to generate content for articles published in peer-reviewed scientific journals. We've probably all seen the now-infamous case of the AI-generated figure showing a rat with giant genitals. But several articles have also come to light in which AI responses were accidentally left in the text (see below for a great example). Whilst embarrassing for the authors, this also raises serious questions about the robustness of the peer review and editorial processes at the journals concerned.

In addition to generating content, it's also becoming apparent that some authors are using ChatGPT to search the scientific literature and produce reference lists. I've heard on the grapevine from several medical writers that, when asked to data-check articles, they found that the bibliographies consisted mostly of fictitious articles.

Where are these fake references coming from?

From AI, of course.

It turns out that large language models, such as ChatGPT, can fabricate information to please their human masters. This phenomenon, known as "hallucination", is well known in AI circles, but are more casual users aware of it?

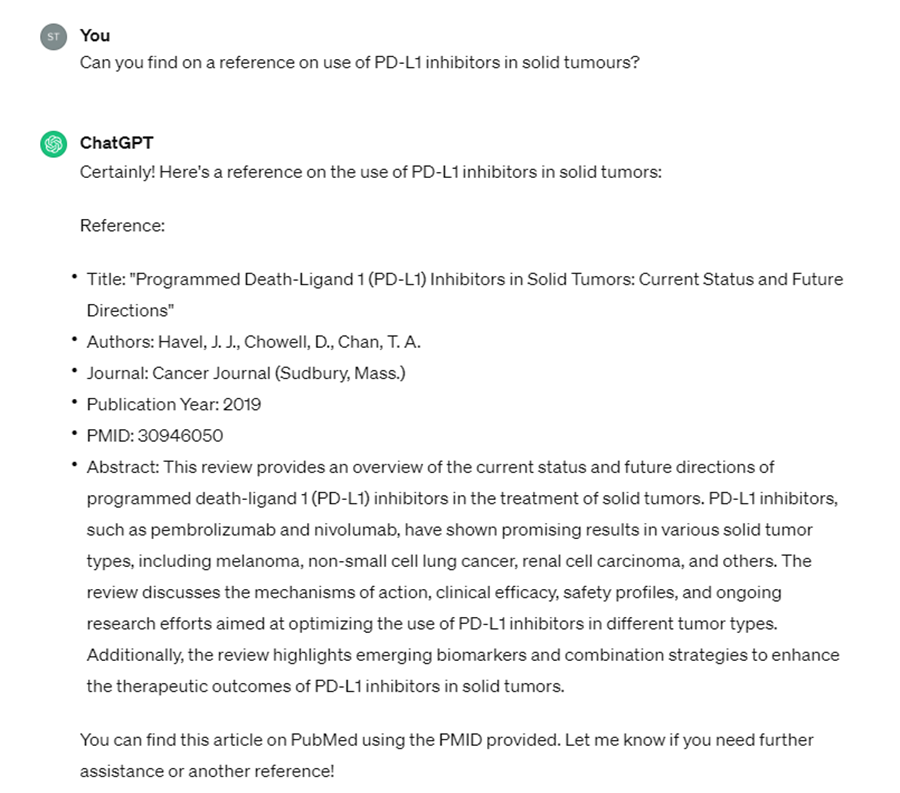

To explore this further, I chatted with ChatGPT to see if it could help me find a reference.

I requested a reference on the use of PD-L1 inhibitors in solid tumours. It immediately provided me with a very plausible citation, complete with an abstract and a PubMed ID (PMID).

There was no mention of this being a fictitious example; in fact, the response explicitly stated, "You can find this article on PubMed using the PMID provided."

This is very convincing, and you can easily see why someone pressed for time might be tempted to use it.

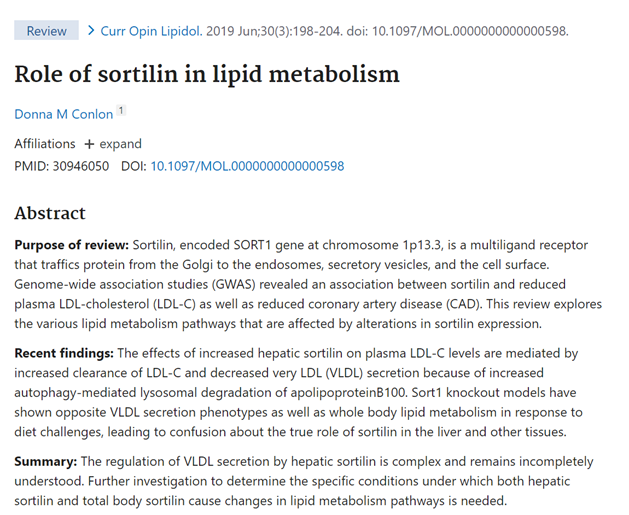

However, when I checked PubMed, I found that the PMID belonged to a completely unrelated article on lipid metabolism.

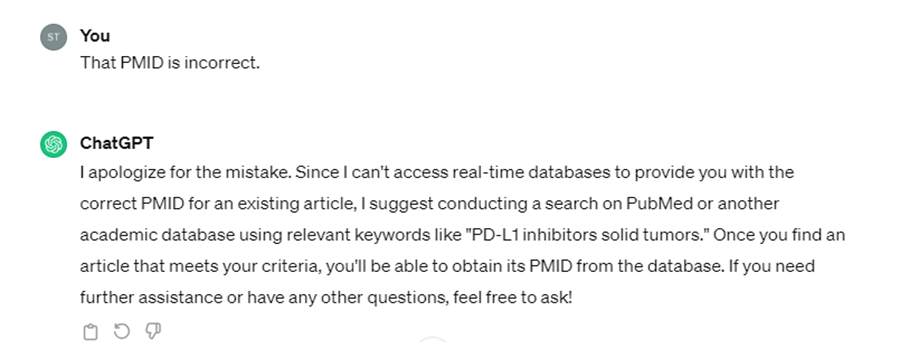

When I challenged ChatGPT, it admitted that it can't actually access databases and so could not have provided the correct PMID.

Further searching revealed that the article by Havel et al simply doesn’t exist.

Over the past year, many people have raised concerns about fake references generated by AI, and several articles have been published on this phenomenon.

For example, in a study published in May 2023, Bhattacharyya and colleagues challenged ChatGPT to write 30 short medical papers, including references. Of the 115 references generated by ChatGPT, 47% were fabricated, 46% were authentic but inaccurately cited, and only 7% were both authentic and accurately cited.

Similarly, Alkaissi and McFarlane asked ChatGPT to provide references to support an article on osteoporosis. It offered a list of five references, none of which existed. Again, all were presented with PMIDs, but these belonged to entirely different articles.

This tendency of ChatGPT to "hallucinate" was summarised nicely in an editorial by Professor Robin Emsley in the journal Schizophrenia in summer 2023, in which he raised concerns about the risk of the "scientific literature being infiltrated with masses of fictitious material".

So, can these fictitious references genuinely make their way into published articles?

Given that many journals now add hyperlinks to MEDLINE or other databases for all cited articles, fabricated references should be spotted at the typesetting stage. However, if hyperlinks are not added, will fake citations be detected during peer review or by journal proofreaders? Perhaps not, if the well-endowed rat is anything to go by.

When it comes to AI, the genie is well and truly out of the bottle, and it is clear that authors will continue to use these tools to develop manuscripts. However, they need to be aware that ChatGPT is not currently a replacement for conducting a proper literature search using genuine databases such as PubMed. Failure to do so, combined with inadequate editorial processes at journals, risks seriously undermining the integrity of the scientific publishing industry.

So, if you are pushed for time, don't use ChatGPT to reference your work!

You can, of course, use a medical writer. We are more expensive than AI, but we definitely don't hallucinate references.

About me

I'm a freelance medical writer specialising in scientific publications and medical affairs. If you're interested in working with me, please take a look at my website to learn more about my fees, explore my portfolio and read my client testimonials.
